Serverless architecture, in a nutshell, removes the need for developers to manage servers. Servers are still running in the background, but the burden of maintaining and scaling that infrastructure shifts to the cloud provider. Developers can pare their code down to individual functions and typically benefit from an “on-demand” cost model, paying for resources only when a function is executed.

A function runs when an event triggers it, typically through an API endpoint. Because functions are abstracted from the primary application, applications with unpredictable or rapidly changing states can scale horizontally. In practice, a function might be triggered when a shopper selects a product on an ecommerce site: it checks a database to see whether that product is in stock and pulls the most up-to-date pricing information.
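As a rough illustration of the ecommerce example, here is a minimal Python sketch of such a function. The `CATALOG` dictionary, the event shape, and the name `handle_product_selected` are all hypothetical stand-ins for illustration; a real function would query an actual database and receive its event from whatever platform invokes it.

```python
from dataclasses import dataclass

# Hypothetical in-memory catalog standing in for a real product database.
CATALOG = {
    "sku-123": {"stock": 4, "price_cents": 1999},
    "sku-456": {"stock": 0, "price_cents": 4500},
}


@dataclass
class ProductStatus:
    sku: str
    in_stock: bool
    price_cents: int


def handle_product_selected(event: dict) -> ProductStatus:
    """Run once per 'product selected' event and return current stock and price."""
    sku = event["sku"]
    record = CATALOG.get(sku)
    if record is None:
        raise KeyError(f"unknown product: {sku}")
    return ProductStatus(
        sku=sku,
        in_stock=record["stock"] > 0,
        price_cents=record["price_cents"],
    )
```

The function holds no state between calls, which is what lets a platform run many copies side by side, or none at all when no shoppers are browsing.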

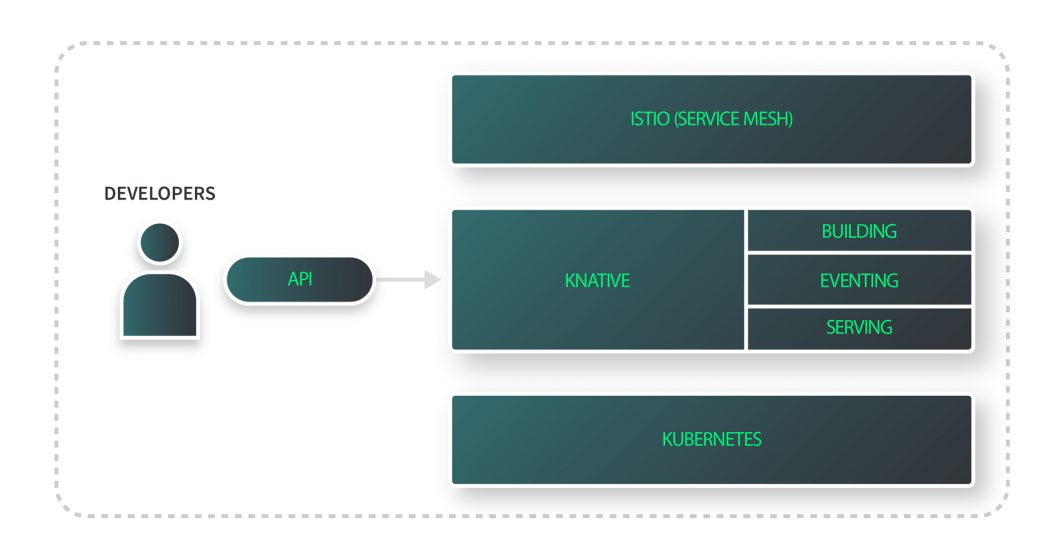

Knative is a powerful functions toolset built on top of Kubernetes for managing serverless applications. Knative enables your Kubernetes cluster to scale pods to zero while still making resources available so pods can scale up when needed.
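To make scale-to-zero concrete, the sketch below shows one simplified way to derive a pod count from the number of in-flight requests. It is not Knative's actual autoscaler, which also accounts for time windows and bursts of traffic; the function name, the per-pod target of 100, and the cap of 10 pods are assumptions chosen for illustration.

```python
import math


def desired_pods(in_flight_requests: float,
                 target_per_pod: float = 100.0,
                 max_pods: int = 10) -> int:
    """Simplified concurrency-based scaling decision (illustrative only)."""
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if in_flight_requests <= 0:
        # No traffic: scale all the way down to zero pods.
        return 0
    # Enough pods to keep each one at or below its target, up to the cap.
    return min(max_pods, math.ceil(in_flight_requests / target_per_pod))
```

With these assumed values, 250 concurrent requests would call for three pods, and an idle service would call for none.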

Knative supports events and triggers that can be customized to control how your application responds. It’s a portable, vendor-neutral tool, so you can use it with your preferred managed Kubernetes service (like our own Linode Kubernetes Engine), or install it on a local cluster. Together, Kubernetes and this installable functions platform optimize the state management and self-healing benefits of running an application on Kubernetes.

Knative provides:

- Automatic Scaling: Knative provides automatic scaling of pods based on traffic and demand, including scaling to zero. This improves resource utilization and reduces costs.

- Event-Driven Compute: Knative allows serverless workloads to respond to events and triggers.

- Portability: Knative is designed to work across different cloud providers and environments. This allows developers to deploy serverless applications to different environments without code modifications.

- Extensibility: Knative provides a set of building blocks that can be customized to meet specific application requirements.

- Enterprise Scalability: Knative is trusted by companies like Puppet and Outfit7.
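The event-driven item above can be pictured as a filter that decides which workloads receive a given event. The sketch below models that idea with exact-match filtering on event attributes. The dictionary layout, the subscriber names, and the `matches` and `route` helpers are hypothetical and are not part of any Knative API.

```python
def matches(filter_attributes: dict, event: dict) -> bool:
    """True when every filter attribute equals the event's value for that key."""
    return all(event.get(key) == value for key, value in filter_attributes.items())


def route(event: dict, triggers: list) -> list:
    """Return the subscribers whose trigger filter matches the event."""
    return [t["subscriber"] for t in triggers if matches(t["filter"], event)]


# Hypothetical triggers: one per event type, plus a catch-all audit logger.
TRIGGERS = [
    {"filter": {"type": "product.selected"}, "subscriber": "stock-checker"},
    {"filter": {"type": "order.placed"}, "subscriber": "invoice-writer"},
    {"filter": {}, "subscriber": "audit-log"},  # empty filter matches everything
]
```

For example, `route({"type": "product.selected", "source": "shop"}, TRIGGERS)` would select `stock-checker` and `audit-log`, while the invoice workload stays idle.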

How It Works

Knative’s functionality is split into Knative Eventing and Knative Serving.

- Eventing: A collection of APIs for routing events from event producers to event consumers, known as sinks, via HTTP POST requests.

- Serving: A set of objects defined as Kubernetes Custom Resource Definitions (CRDs), which extend the Kubernetes API. These objects determine how a serverless workload interacts with your Kubernetes cluster, using the following resources:

- Routes: Map a network endpoint to one or more Revisions and manage traffic between them.

- Configurations: Maintains your desired state as a separate layer from your code.

- Revisions: Point-in-time snapshots of your code and configuration, created for each change.

- Services: Workload management that controls object creation and ensures that your app always has a route, a configuration, and a revision. Traffic can be routed to either the latest revision or a specific revision. Knative uses the Istio gateway service by default.
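The relationship between Routes and Revisions is easier to see with a small traffic-splitting sketch. The code below assumes a route described as a list of (revision name, percent) pairs that sum to 100 and picks a revision for one request. The function name, the revision names, and the `roll` parameter are hypothetical; Knative's own routing is handled by its networking layer, not by application code like this.

```python
from typing import Sequence, Tuple


def pick_revision(traffic: Sequence[Tuple[str, int]], roll: float) -> str:
    """Pick a revision for one request.

    traffic: (revision_name, percent) pairs that must sum to 100.
    roll: a number in [0, 100), e.g. random.random() * 100.
    """
    if sum(percent for _, percent in traffic) != 100:
        raise ValueError("traffic percentages must sum to 100")
    if not 0 <= roll < 100:
        raise ValueError("roll must be in [0, 100)")
    cumulative = 0
    for name, percent in traffic:
        cumulative += percent
        if roll < cumulative:
            return name
    raise AssertionError("unreachable when percentages sum to 100")


# Hypothetical canary rollout: most traffic stays on the older revision.
CANARY = [("shop-v1", 90), ("shop-v2", 10)]
```

Calling `pick_revision(CANARY, random.random() * 100)` for each request would send roughly one in ten requests to the newer revision, which is the kind of gradual rollout that keeping every revision as a snapshot makes possible.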

You can install Knative on your cluster using YAML manifests or the Knative Operator for Kubernetes. There are also Knative Helm charts submitted by Kubernetes community members. Knative has a quickstart environment, but that’s only recommended for testing purposes.

Getting Started on Akamai Cloud

Knative is an excellent complement to LKE’s built-in autoscaler, which allows you to easily control the minimum and maximum number of nodes within a cluster’s node pool. Using Knative and the autoscaler together provides finely tuned management at both the pod and infrastructure levels.

To help you get started, we teamed up with Justin Mitchel of Coding for Entrepreneurs on our new Try Knative on-demand course, available on February 28, 2023. This video series includes creating a Kubernetes cluster using Terraform, configuring a Knative service, and deploying a containerized application.
