Use Varnish & NGINX to Serve WordPress over SSL & HTTP on Debian 8

Varnish is a powerful and flexible caching HTTP reverse proxy. It can be installed in front of any web server to cache its contents, which will improve speed and reduce server load. When a client requests a webpage, Varnish first tries to send it from the cache. If the page is not cached, Varnish forwards the request to the backend server, fetches the response, stores it in the cache, and delivers it to the client.

When a cached resource is requested through Varnish, the request doesn’t reach the web server or involve PHP or MySQL execution. Instead, Varnish reads it from memory, delivering the cached page in a matter of microseconds.

One Varnish drawback is that it doesn’t support SSL-encrypted traffic. You can circumvent this issue by using NGINX for both SSL decryption and as a backend web server. Using NGINX for both tasks reduces the complexity of the setup, leading to fewer potential points of failure, lower resource consumption, and fewer components to maintain.

Both Varnish and NGINX are versatile tools with a variety of uses. This guide uses Varnish 4.0, which comes included in Debian 8 repositories, and presents a basic setup that you can refine to meet your specific needs.

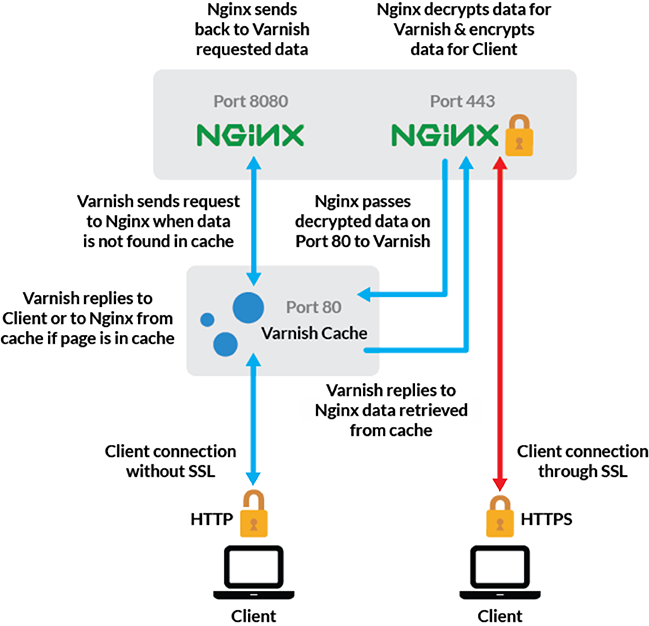

How Varnish and NGINX Work Together

In this guide, we will configure NGINX and Varnish for two WordPress sites:

- www.example-over-http.com will be an unencrypted, HTTP-only site.
- www.example-over-https.com will be a separate, HTTPS-encrypted site.

For HTTP traffic, Varnish will listen on port 80. If content is found in the cache, Varnish will serve it. If not, it will pass the request to NGINX on port 8080. In the second case, NGINX will send the requested content back to Varnish on the same port, then Varnish will store the fetched content in the cache and deliver it to the client on port 80.

For HTTPS traffic, NGINX will listen on port 443 and send decrypted traffic to Varnish on port 80. If content is found in the cache, Varnish will send the unencrypted content from the cache back to NGINX, which will encrypt it and send it to the client. If content is not found in the cache, Varnish will request it from NGINX on port 8080, store it in the cache, and then send it unencrypted to frontend NGINX, which will encrypt it and send it to the client’s browser.
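
The two request paths described above can be traced with a small toy model. This is only an illustrative sketch, not real Varnish or NGINX behaviour: the class and function names (VarnishSim, handle) and the hop labels are made up for the example, and the cache is a plain dictionary keyed by host and URL.

```python
from dataclasses import dataclass, field


@dataclass
class VarnishSim:
    """Toy stand-in for Varnish: a dictionary cache in front of NGINX."""
    cache: dict = field(default_factory=dict)

    def fetch(self, host, url):
        key = (host, url)
        hops = ["varnish:80"]            # every request reaches Varnish on port 80
        if key not in self.cache:        # cache miss: ask backend NGINX on port 8080
            hops.append("nginx:8080")
            self.cache[key] = "<html>{}{}</html>".format(host, url)
        return self.cache[key], hops


def handle(sim, scheme, host, url):
    """Return the list of hops a request passes through."""
    # HTTPS is terminated by frontend NGINX on port 443 before reaching Varnish.
    hops = ["nginx:443"] if scheme == "https" else []
    _body, inner_hops = sim.fetch(host, url)
    return hops + inner_hops


demo = VarnishSim()
print(handle(demo, "http", "www.example-over-http.com", "/"))    # first request: miss
print(handle(demo, "http", "www.example-over-http.com", "/"))    # second request: hit
print(handle(demo, "https", "www.example-over-https.com", "/"))  # HTTPS, miss
```

On a cache hit the backend hop disappears from the list, which is the whole point of the setup: PHP and MySQL are only involved on a miss.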

Our setup is illustrated below. Please note that frontend NGINX and backend NGINX are one and the same server:

Before You Begin

This tutorial assumes that you have SSH access to your Linode running Debian 8 (Jessie). Before you get started:

- Complete the steps in our Creating a Compute Instance and Setting Up and Securing a Compute Instance guides. You’ll need a standard user account with sudo privileges for many commands in this guide.

- Follow the steps outlined in our LEMP on Debian 8 guide. Skip the NGINX configuration section, since we’ll address it later in this guide.

- After configuring NGINX according to this guide, follow the steps in our WordPress guide to install and configure WordPress. We’ll include a step in the instructions to let you know when it’s time to do this.

Install and Configure Varnish

For all steps in this section, replace 203.0.113.100 with your Linode’s public IPv4 address, and 2001:DB8::1234 with its IPv6 address.

Update your package repositories and install Varnish:

sudo apt-get update
sudo apt-get install varnish

Open /etc/default/varnish with sudo rights. To make sure Varnish starts at boot, under Should we start varnishd at boot? set START to yes:

- File: /etc/default/varnish

START=yes

In the Alternative 2 section, make the following changes to DAEMON_OPTS:

- File: /etc/default/varnish

DAEMON_OPTS="-a :80 \
             -T localhost:6082 \
             -f /etc/varnish/custom.vcl \
             -S /etc/varnish/secret \
             -s malloc,1G"

This will set Varnish to listen on port 80 and will instruct it to use the custom.vcl configuration file. The custom configuration file is used so that future updates to Varnish do not overwrite changes to default.vcl.

The -s malloc,1G line sets the maximum amount of RAM that will be used by Varnish to store content. This value can be adjusted to suit your needs, taking into account the server’s total RAM along with the size and expected traffic of your website. For example, on a system with 4 GB of RAM, you can allocate 2 or 3 GB to Varnish.

When you’ve made these changes, save and exit the file.
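
As a rough aid for picking the malloc value, the sizing rule of thumb above can be written down as a small helper. This is a sketch under assumptions: the function name and the default fraction of 0.5 are illustrative choices (the text only suggests 2 or 3 GB on a 4 GB system), and the result still has to be copied into DAEMON_OPTS by hand.

```python
def varnish_daemon_opts(total_ram_gb, cache_fraction=0.5,
                        vcl="/etc/varnish/custom.vcl"):
    """Build a DAEMON_OPTS value with the cache sized as a share of RAM.

    cache_fraction is an assumed tuning knob, not a Varnish setting.
    """
    # Round down to whole gigabytes, but never go below 1G.
    cache_gb = max(1, int(total_ram_gb * cache_fraction))
    return ("-a :80 -T localhost:6082 -f {} "
            "-S /etc/varnish/secret -s malloc,{}G").format(vcl, cache_gb)


print(varnish_daemon_opts(4))        # half of 4 GB
print(varnish_daemon_opts(4, 0.75))  # three quarters of 4 GB
```

Leave enough memory for NGINX, PHP-FPM and MySQL, which run on the same server in this setup.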

Create a Custom Varnish Configuration File

To start customizing your Varnish configuration, create a new file called custom.vcl:

sudo touch /etc/varnish/custom.vcl

Varnish configuration uses a domain-specific language called Varnish Configuration Language (VCL). First, specify the VCL version used:

- File: /etc/varnish/custom.vcl

vcl 4.0;

Specify that the backend (NGINX) is listening on port 8080, by adding the backend default directive:

- File: /etc/varnish/custom.vcl

backend default {
    .host = "localhost";
    .port = "8080";
}

Allow cache-purging requests only from localhost and from the server’s own IP addresses using the acl directive:

- File: /etc/varnish/custom.vcl

acl purger {
    "localhost";
    "203.0.113.100";
    "2001:DB8::1234";
}

Remember to substitute your Linode’s actual IP addresses for the example addresses.
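
The effect of this access list can be pictured with a short Python sketch. It is only an approximation of what Varnish does: the names PURGER_ACL and may_purge are hypothetical, and "localhost" is assumed to resolve to 127.0.0.1 and ::1.

```python
import ipaddress

# Assumption: "localhost" in the VCL acl resolves to both loopback addresses.
PURGER_ACL = ["127.0.0.1", "::1", "203.0.113.100", "2001:DB8::1234"]


def may_purge(client_ip, acl=PURGER_ACL):
    """Return True if client_ip equals one of the addresses in the list."""
    client = ipaddress.ip_address(client_ip)
    # Parsing both sides makes the comparison insensitive to IPv6 letter case.
    return any(client == ipaddress.ip_address(entry) for entry in acl)


print(may_purge("203.0.113.100"))   # the server's own IPv4 address
print(may_purge("2001:db8::1234"))  # same IPv6 address, lower case
print(may_purge("198.51.100.7"))    # some other host
```

Any client outside the list is refused when it sends a PURGE request.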

Create the sub vcl_recv routine, which is used when a request is sent by an HTTP client.

- File: /etc/varnish/custom.vcl

sub vcl_recv {

}

The settings in the following steps should be placed inside the sub vcl_recv brackets:

Redirect HTTP requests to HTTPS for our SSL website:

- File: /etc/varnish/custom.vcl

if (client.ip != "127.0.0.1" && req.http.host ~ "example-over-https.com") {
    set req.http.x-redir = "https://www.example-over-https.com" + req.url;
    return(synth(850, ""));
}

Remember to replace the example domain with your own.

Allow cache-purging requests only from the IP addresses in the acl purger section above. If a purge request comes from a different IP address, an error message will be produced:

- File: /etc/varnish/custom.vcl

if (req.method == "PURGE") {
    if (!client.ip ~ purger) {
        return(synth(405, "This IP is not allowed to send PURGE requests."));
    }
    return (purge);
}

If the request already carries an X-Forwarded-For header, overwrite it with the client’s IP address:

- File: /etc/varnish/custom.vcl

if (req.restarts == 0) {
    if (req.http.X-Forwarded-For) {
        set req.http.X-Forwarded-For = client.ip;
    }
}

Exclude POST requests or those with basic authentication from caching:

- File: /etc/varnish/custom.vcl

if (req.http.Authorization || req.method == "POST") {
    return (pass);
}

Exclude RSS feeds from caching:

- File: /etc/varnish/custom.vcl

if (req.url ~ "/feed") {
    return (pass);
}

Tell Varnish not to cache the WordPress admin and login pages:

- File: /etc/varnish/custom.vcl

if (req.url ~ "wp-admin|wp-login") {
    return (pass);
}

WordPress sets many cookies that are safe to ignore. To remove them, add the following lines:

- File: /etc/varnish/custom.vcl

set req.http.cookie = regsuball(req.http.cookie, "wp-settings-\d+=[^;]+(; )?", "");
set req.http.cookie = regsuball(req.http.cookie, "wp-settings-time-\d+=[^;]+(; )?", "");

if (req.http.cookie == "") {
    unset req.http.cookie;
}

Note: This is the final setting to be placed inside the sub vcl_recv routine. All directives in the following steps should be placed after its closing bracket.
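
To see what the two cookie-stripping expressions in vcl_recv do, they can be tried offline with Python’s re module, whose syntax agrees with the PCRE used by regsuball for these particular patterns. The function name strip_wp_settings is made up for this sketch, and returning None stands in for unset req.http.cookie.

```python
import re


def strip_wp_settings(cookie_header):
    """Remove wp-settings-N and wp-settings-time-N cookies from a Cookie header."""
    cleaned = re.sub(r"wp-settings-\d+=[^;]+(; )?", "", cookie_header)
    cleaned = re.sub(r"wp-settings-time-\d+=[^;]+(; )?", "", cleaned)
    # An empty header corresponds to the VCL branch that unsets the cookie.
    return cleaned or None


print(strip_wp_settings("wp-settings-1=abc; wp-settings-time-1=123; foo=bar"))
print(strip_wp_settings("wp-settings-1=abc; wp-settings-time-1=123"))
```

When one of these cookies is the last one in the header, the preceding "; " separator is left behind (for example "foo=bar; "), because the pattern only consumes a separator that follows the cookie.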

Redirect HTTP to HTTPS using the sub vcl_synth directive with the following settings:

- File: /etc/varnish/custom.vcl

sub vcl_synth {
    if (resp.status == 850) {
        set resp.http.Location = req.http.x-redir;
        set resp.status = 302;
        return (deliver);
    }
}
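
The redirect is split across two subroutines: vcl_recv raises the internal status 850 and vcl_synth turns it into a 302 response. The combined decision can be sketched in Python as follows; the function name redirect_for is hypothetical, and re.search is used to mirror the VCL ~ operator, which performs an unanchored regular expression match.

```python
import re


def redirect_for(client_ip, host, url):
    """Return (status, location) when a request should be redirected, else None."""
    # Requests from 127.0.0.1 come from frontend NGINX and are already HTTPS.
    if client_ip != "127.0.0.1" and re.search("example-over-https.com", host):
        return 302, "https://www.example-over-https.com" + url
    return None


print(redirect_for("198.51.100.7", "www.example-over-https.com", "/about/"))
print(redirect_for("127.0.0.1", "www.example-over-https.com", "/about/"))
print(redirect_for("198.51.100.7", "www.example-over-http.com", "/"))
```

The check on the client address is what prevents a redirect loop: decrypted HTTPS traffic arrives from NGINX on the local machine and must not be redirected again.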

Cache-purging for a particular page must occur each time we make edits to that page. To implement this, we use the sub vcl_purge directive:

- File: /etc/varnish/custom.vcl

sub vcl_purge {
    set req.method = "GET";
    set req.http.X-Purger = "Purged";
    return (restart);
}

The sub vcl_backend_response directive is used to handle communication with the backend server, NGINX. We use it to set the amount of time the content remains in the cache. We can also set a grace period, which determines how long Varnish may keep serving expired content from the cache, for example while the backend server is down. Time can be set in seconds (s), minutes (m), hours (h) or days (d). Here, we’ve set the caching time to 24 hours, and the grace period to 1 hour, but you can adjust these settings based on your needs:

- File: /etc/varnish/custom.vcl

sub vcl_backend_response {
    set beresp.ttl = 24h;
    set beresp.grace = 1h;

Before closing the vcl_backend_response block with a bracket, allow cookies to be set only if you are on admin pages or WooCommerce-specific pages:

- File: /etc/varnish/custom.vcl

    if (bereq.url !~ "wp-admin|wp-login|product|cart|checkout|my-account|/?remove_item=") {
        unset beresp.http.set-cookie;
    }
}

Remember to include in the above series any page that requires cookies to work, for example phpmyadmin|webmail|postfixadmin, etc. If you change the WordPress login page from wp-login.php to something else, also add that new name to this series.

Note: The “WooCommerce Recently Viewed” widget, which displays a group of recently viewed products, uses a cookie to store recent user-specific actions and this cookie prevents Varnish from caching product pages when they are browsed by visitors. If you want to cache product pages when they are only browsed, before products are added to the cart, you must disable this widget.

Special attention is required when enabling widgets that use cookies to store recent user-specific activities, if you want Varnish to cache as many pages as possible.
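
Before extending the URL pattern in vcl_backend_response, it can help to check which URLs it actually matches. The sketch below uses Python’s re module with the same pattern; the names COOKIE_PATHS and keeps_set_cookie are illustrative only.

```python
import re

# Same alternation as in the VCL condition; the match is unanchored.
COOKIE_PATHS = re.compile(
    r"wp-admin|wp-login|product|cart|checkout|my-account|/?remove_item="
)


def keeps_set_cookie(url):
    """Return True if the backend's Set-Cookie header would be left in place."""
    return COOKIE_PATHS.search(url) is not None


for url in ["/wp-admin/index.php", "/cart/", "/2016/03/hello-world/",
            "/my-cartoon-post/"]:
    print(url, keeps_set_cookie(url))
```

Because the match is a plain substring search, a post slug such as /my-cartoon-post/ also matches "cart" and keeps its cookies. If that matters for your site, consider more specific patterns such as "/cart/".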

Change the headers for purge requests by adding the sub vcl_deliver directive:

- File: /etc/varnish/custom.vcl

sub vcl_deliver {
    if (req.http.X-Purger) {
        set resp.http.X-Purger = req.http.X-Purger;
    }
}
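
Once Varnish is running with this configuration, a purge can be triggered by hand to confirm that the PURGE handling and the X-Purger header work together. The following is a minimal sketch using Python’s standard urllib; the helper names are hypothetical, and it has to be run from an address listed in acl purger.

```python
import urllib.request


def build_purge_request(url):
    """Create an HTTP request that uses the non-standard PURGE method."""
    return urllib.request.Request(url, method="PURGE")


def purge(url, timeout=5):
    """Send the PURGE request and return (status, X-Purger header value).

    urlopen raises urllib.error.HTTPError for the 405 response that
    Varnish sends to clients outside the purger acl.
    """
    with urllib.request.urlopen(build_purge_request(url), timeout=timeout) as resp:
        return resp.status, resp.headers.get("X-Purger")
```

For example, calling purge("http://www.example-over-http.com/") from the server itself should return a tuple whose second element is "Purged", since vcl_purge restarts the request as a GET and vcl_deliver copies the header into the response.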

This concludes the custom.vcl configuration. You can now save and exit the file. The final custom.vcl file will look like this.

Note: You can download the complete sample configuration file using the link above and wget. If you do, remember to replace the variables as described above.

Edit the Varnish Startup Configuration

For Varnish to work properly, we also need to edit the /lib/systemd/system/varnish.service file so that it uses our custom configuration file and the same port number and allocated memory values that we set in /etc/default/varnish.

Open /lib/systemd/system/varnish.service and find the two lines beginning with ExecStart. Modify them to look like this:

- File: /lib/systemd/system/varnish.service

ExecStartPre=/usr/sbin/varnishd -C -f /etc/varnish/custom.vcl
ExecStart=/usr/sbin/varnishd -a :80 -T localhost:6082 -f /etc/varnish/custom.vcl -S /etc/varnish/secret -s malloc,1G

After saving and exiting the file, reload the systemd process:

sudo systemctl daemon-reload

Install and Configure PHP

Before configuring NGINX, we have to install PHP-FPM. FPM is short for FastCGI Process Manager, and it allows the web server to act as a proxy, passing all requests with the .php file extension to the PHP interpreter.

Install PHP-FPM:

sudo apt-get install php5-fpm php5-mysql

Open the /etc/php5/fpm/php.ini file. Find the directive cgi.fix_pathinfo=, uncomment it and set it to 0. If this parameter is set to 1, the PHP interpreter will try to process the file whose path is closest to the requested path; if it’s set to 0, the interpreter will only process the file with the exact path, which is a safer option.

- File: /etc/php5/fpm/php.ini

cgi.fix_pathinfo=0

After you’ve made this change, save and exit the file.

Open /etc/php5/fpm/pool.d/www.conf and confirm that the listen = directive, which specifies the socket used by NGINX to pass requests to PHP-FPM, matches the following:

- File: /etc/php5/fpm/pool.d/www.conf

listen = /var/run/php5-fpm.sock

Save and exit the file.

Restart PHP-FPM:

sudo systemctl restart php5-fpm

Open /etc/nginx/fastcgi_params and find the fastcgi_param HTTPS directive. Below it, add the following two lines, which are necessary for NGINX to interact with the FastCGI service:

- File: /etc/nginx/fastcgi_params

fastcgi_param SCRIPT_FILENAME $request_filename;
fastcgi_param PATH_INFO $fastcgi_path_info;

Once you’re done, save and exit the file.

Configure NGINX

Open /etc/nginx/nginx.conf and comment out the ssl_protocols and ssl_prefer_server_ciphers directives. We’ll include these SSL settings in the server block within the /etc/nginx/sites-enabled/default file:

- File: /etc/nginx/nginx.conf

# ssl_protocols TLSv1 TLSv1.1 TLSv1.2; # Dropping SSLv3, ref: POODLE
# ssl_prefer_server_ciphers on;

Since the access logs and error logs will be defined for each individual website in the server block, comment out the access_log and error_log directives:

- File: /etc/nginx/nginx.conf

# access_log /var/log/nginx/access.log;
# error_log /var/log/nginx/error.log;

Save and exit the file.

Next, we’ll configure the HTTP-only website, www.example-over-http.com. Begin by making a backup of the default server block (virtual host) file:

sudo mv /etc/nginx/sites-available/default /etc/nginx/sites-available/default-backup

Open a new /etc/nginx/sites-available/default file and add the following blocks:

- File: /etc/nginx/sites-available/default

server {
    listen 8080;
    listen [::]:8080;
    server_name example-over-http.com;
    return 301 http://www.example-over-http.com$request_uri;
}

server {
    listen 8080;
    listen [::]:8080;
    server_name www.example-over-http.com;
    root /var/www/html/example-over-http.com/public_html;
    port_in_redirect off;
    index index.php;

    location / {
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        include fastcgi_params;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass unix:/var/run/php5-fpm.sock;
    }

    error_log /var/www/html/example-over-http.com/logs/error.log notice;
}

A few things to note here:

- The first server block is used to redirect all requests for example-over-http.com to www.example-over-http.com. This assumes you want to use the www subdomain and have added a DNS A record for it.
- listen [::]:8080; is needed if you want your site to be also accessible over IPv6.
- port_in_redirect off; prevents NGINX from appending the port number to the requested URL.
- fastcgi directives are used to proxy requests for PHP code execution to PHP-FPM, via the FastCGI protocol.

To configure NGINX for the SSL-encrypted website (in our example we called it www.example-over-https.com), you need two more server blocks. Append the following server blocks to your /etc/nginx/sites-available/default file:

- File: /etc/nginx/sites-available/default

server {
    listen 443 ssl;
    listen [::]:443 ssl;
    server_name www.example-over-https.com;
    port_in_redirect off;

    ssl on;
    ssl_certificate /etc/nginx/ssl/ssl-bundle.crt;
    ssl_certificate_key /etc/nginx/ssl/example-over-https.com.key;
    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
    ssl_ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS;
    ssl_prefer_server_ciphers on;
    ssl_session_cache shared:SSL:20m;
    ssl_session_timeout 60m;

    add_header Strict-Transport-Security "max-age=31536000";
    add_header X-Content-Type-Options nosniff;

    location / {
        proxy_pass http://127.0.0.1:80;
        proxy_set_header Host $http_host;
        proxy_set_header X-Forwarded-Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_set_header HTTPS "on";

        access_log /var/www/html/example-over-https.com/logs/access.log;
        error_log /var/www/html/example-over-https.com/logs/error.log notice;
    }
}

server {
    listen 8080;
    listen [::]:8080;
    server_name www.example-over-https.com;
    root /var/www/html/example-over-https.com/public_html;
    index index.php;
    port_in_redirect off;

    location / {
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        include fastcgi_params;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param HTTPS on;
        fastcgi_pass unix:/var/run/php5-fpm.sock;
    }
}

For an SSL-encrypted website, you need one server block to receive traffic on port 443 and pass decrypted traffic to Varnish on port 80, and another server block to serve unencrypted traffic to Varnish on port 8080, when Varnish asks for it.

Important: The ssl_certificate directive must specify the location and name of the SSL certificate file. Take a look at our guide to using SSL on NGINX for more information, and update the ssl_certificate and ssl_certificate_key values as needed.

Alternatively, if you don’t have a commercially-signed SSL certificate (issued by a CA), you can issue a self-signed SSL certificate using openssl, but this should be done only for testing purposes. Self-signed sites will return a “This Connection is Untrusted” message when opened in a browser.

Now, let’s review the key points of the previous two server blocks:

- ssl_session_cache shared:SSL:20m; creates a 20MB cache shared between all worker processes. This cache is used to store SSL session parameters to avoid SSL handshakes for parallel and subsequent connections. 1MB can store about 4000 sessions, so adjust this cache size according to the expected traffic for your website.
- ssl_session_timeout 60m; specifies the SSL session cache timeout. Here it’s set to 60 minutes, but it can be decreased or increased, depending on traffic and resources.
- ssl_prefer_server_ciphers on; means that when an SSL connection is established, the server ciphers are preferred over client ciphers.
- add_header Strict-Transport-Security "max-age=31536000"; tells web browsers they should only interact with this server using a secure HTTPS connection. The max-age value specifies, in seconds, how long browsers should keep enforcing HTTPS-only connections to the site.
- add_header X-Content-Type-Options nosniff; tells the browser not to override the response content’s MIME type. So, if the server says the content is text, the browser will render it as text.
- proxy_pass http://127.0.0.1:80; proxies all the decrypted traffic to Varnish, which listens on port 80.
- proxy_set_header directives add specific headers to requests, so SSL traffic can be recognized.
- access_log and error_log indicate the location and name of the respective types of logs. Adjust these locations and names according to your setup, and make sure the www-data user has permissions to modify each log.
- fastcgi directives present in the last server block are necessary to proxy requests for PHP code execution to PHP-FPM, via the FastCGI protocol.
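
The two numeric settings in this list are easy to sanity-check with a few lines of arithmetic. The sketch below only restates figures from the text (about 4000 sessions per megabyte, a max-age of 31536000 seconds); the function names are made up for the example.

```python
SESSIONS_PER_MB = 4000   # approximate figure quoted in the list above
SECONDS_PER_DAY = 86400


def session_capacity(cache_mb):
    """Approximate number of SSL sessions a shared cache of this size holds."""
    return cache_mb * SESSIONS_PER_MB


def max_age_days(seconds):
    """Convert a Strict-Transport-Security max-age value to days."""
    return seconds / SECONDS_PER_DAY


print(session_capacity(20))     # capacity of the 20m cache used in this guide
print(max_age_days(31536000))   # length of the HSTS policy in days
```

With the values used in this guide, the cache holds roughly 80000 sessions and the HSTS policy lasts 365 days.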

Optional: To prevent access to your website via direct input of your IP address into a browser, you can put a catch-all default server block right at the top of the file:

- File: /etc/nginx/sites-available/default

server {
    listen 8080 default_server;
    listen [::]:8080;
    server_name _;
    root /var/www/html;
    index index.html;
}

The /var/www/html/index.html file can contain a simple message like “Page not found!”

Restart NGINX, then start Varnish:

sudo systemctl restart nginx
sudo systemctl start varnish

Install WordPress, following our How to Install and Configure WordPress guide. Once WordPress is installed, continue with this guide.

After installing WordPress, restart Varnish to clear any cached redirects to the setup page:

sudo systemctl restart varnish

Install the WordPress “Varnish HTTP Purge” Plugin

When you edit a WordPress page and update it, the modification won’t be visible even if you refresh the browser because it will receive the cached version of the page. To purge the cached page automatically when you edit a page, you must install a free WordPress plugin called “Varnish HTTP Purge.”

To install this plugin, log in to your WordPress website and click Plugins on the main left sidebar. Select Add New at the top of the page, and search for Varnish HTTP Purge. When you’ve found it, click Install Now, then Activate.

Test Your Setup

To test whether Varnish and NGINX are doing their jobs for the HTTP website, run:

wget -SS http://www.example-over-http.com

The output should look like this:

--2016-11-04 16:48:43--  http://www.example-over-http.com/
Resolving www.example-over-http.com (www.example-over-http.com)... your_server_ip
Connecting to www.example-over-http.com (www.example-over-http.com)|your_server_ip|:80... connected.
HTTP request sent, awaiting response...
  HTTP/1.1 200 OK
  Server: nginx/1.6.2
  Date: Sat, 26 Mar 2016 22:25:55 GMT
  Content-Type: text/html; charset=UTF-8
  Link: <http://www.example-over-http.com/wp-json/>; rel="https://api.w.org/"
  X-Varnish: 360795 360742
  Age: 467
  Via: 1.1 varnish-v4
  Transfer-Encoding: chunked
  Connection: keep-alive
  Accept-Ranges: bytes
Length: unspecified [text/html]
Saving to: ‘index.html.2’

index.html              [ <=>         ]  12.17K  --.-KB/s   in 0s

2016-03-27 00:33:42 (138 MB/s) - ‘index.html.2’ saved [12459]

The third line specifies the connection port number: 80. The backend server is correctly identified: Server: nginx/1.6.2. And the traffic passes through Varnish as intended: Via: 1.1 varnish-v4. The period of time the object has been kept in cache by Varnish is also displayed in seconds: Age: 467.

To test the SSL-encrypted website, run the same command, replacing the URL:

wget -SS https://www.example-over-https.com

The output should be similar to that of the HTTP-only site.

Note: If you’re using a self-signed certificate while testing, add the --no-check-certificate option to the wget command:

wget -SS --no-check-certificate https://www.example-over-https.com
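
The checks described in this section can also be automated. The following sketch parses response headers pasted from wget output and reports the same facts; the function name summarize and the dictionary keys are illustrative. It relies on the Varnish 4 convention that the X-Varnish header carries two request IDs when the object was served from the cache.

```python
SAMPLE = """\
HTTP/1.1 200 OK
Server: nginx/1.6.2
X-Varnish: 360795 360742
Age: 467
Via: 1.1 varnish-v4
"""


def summarize(headers_text):
    """Extract the cache-related facts from a block of response headers."""
    headers = {}
    for line in headers_text.splitlines():
        name, sep, value = line.strip().partition(":")
        if sep:  # skip the status line, which has no colon
            headers[name.strip().lower()] = value.strip()
    xids = headers.get("x-varnish", "").split()
    return {
        "via_varnish": "varnish" in headers.get("via", "").lower(),
        "cache_hit": len(xids) == 2,   # two IDs: served from cache
        "age_seconds": int(headers.get("age", "0")),
        "backend": headers.get("server"),
    }


print(summarize(SAMPLE))
```

If cache_hit stays false on repeated requests for the same page, a cookie or one of the pass rules in vcl_recv is probably preventing the page from being cached.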

Next Steps

By using NGINX in conjunction with Varnish, the speed of any WordPress website can be drastically improved while making the best use of your hardware resources.

You can strengthen the security of the SSL connection by generating a custom Diffie-Hellman (DH) parameter, for a more secure cryptographic key exchange process.

An additional configuration option is to enable Varnish logging for the plain HTTP website, since now Varnish will be the first to receive the client requests, while NGINX only receives requests for those pages that are not found in the cache. For SSL-encrypted websites, the logging should be done by NGINX because client requests pass through it first. Logging becomes even more important if you use log monitoring software such as Fail2ban, Awstats or Webalizer.

More Information

You may wish to consult the following resources for additional information on this topic. While these are provided in the hope that they will be useful, please note that we cannot vouch for the accuracy or timeliness of externally hosted materials.

This page was originally published on