Latest 3.0 and swappiness

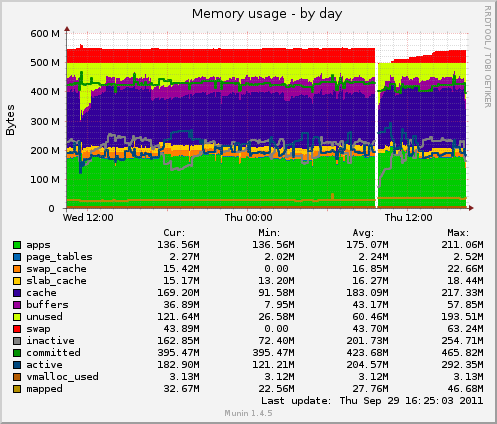

~~http://bryantrv.com/images/temp/memory-day.png

~~http://bryantrv.com/images/temp/memory-day.png

My kern log has a bunch of entries like this (though note the server was running fine at the time of this log entry):

Sep 29 16:26:16 linpear kernel: swapper: page allocation failure: order:5, mode:0x20

Sep 29 16:26:16 linpear kernel: Pid: 0, comm: swapper Not tainted 3.0.4-linode38 #1

Sep 29 16:26:16 linpear kernel: Call Trace:

Sep 29 16:26:16 linpear kernel: [<c018b258>] ? warn_alloc_failed+0x98/0x100

Sep 29 16:26:16 linpear kernel: [<c018baa4>] ? __alloc_pages_nodemask+0x3f4/0x630

Sep 29 16:26:16 linpear kernel: [<c01b22b3>] ? T.833+0xb3/0x2e0

Sep 29 16:26:16 linpear kernel: [<c01b2726>] ? cache_alloc_refill+0x246/0x290

Sep 29 16:26:16 linpear kernel: [<c01b282d>] ? __kmalloc+0xbd/0xd0

Sep 29 16:26:16 linpear kernel: [<c053a7fe>] ? pskb_expand_head+0x12e/0x200

Sep 29 16:26:16 linpear kernel: [<c066530d>] ? packet_rcv_spkt+0xfd/0x140

Sep 29 16:26:16 linpear kernel: [<c053ad3d>] ? __pskb_pull_tail+0x4d/0x2b0

Sep 29 16:26:16 linpear kernel: [<c0607ad3>] ? ipv4_confirm+0xf3/0x1b0

Sep 29 16:26:16 linpear kernel: [<c05436dd>] ? dev_hard_start_xmit+0x1dd/0x3e0

Sep 29 16:26:16 linpear kernel: [<c05c8020>] ? ip_finish_output2+0x260/0x260

Sep 29 16:26:16 linpear kernel: [<c05c8020>] ? ip_finish_output2+0x260/0x260

Sep 29 16:26:16 linpear kernel: [<c0557a62>] ? sch_direct_xmit+0xb2/0x170

Sep 29 16:26:16 linpear kernel: [<c05439d9>] ? dev_queue_xmit+0xf9/0x320

Sep 29 16:26:16 linpear kernel: [<c05c815b>] ? ip_finish_output+0x13b/0x300

Sep 29 16:26:16 linpear kernel: [<c05c83ca>] ? ip_output+0xaa/0xe0

Sep 29 16:26:16 linpear kernel: [<c05c7568>] ? ip_local_out+0x18/0x20

Sep 29 16:26:16 linpear kernel: [<c05daf25>] ? tcp_transmit_skb+0x385/0x670

Sep 29 16:26:16 linpear kernel: [<c05dd965>] ? tcp_write_xmit+0x1e5/0x4f0

Sep 29 16:26:16 linpear kernel: [<c05ddcd4>] ? __tcp_push_pending_frames+0x24/0x90

Sep 29 16:26:16 linpear kernel: [<c05d9a1b>] ? tcp_rcv_established+0xfb/0x610

Sep 29 16:26:16 linpear kernel: [<c057fb3c>] ? nf_iterate+0x6c/0x90

Sep 29 16:26:16 linpear kernel: [<c05e080e>] ? tcp_v4_do_rcv+0xce/0x1a0

Sep 29 16:26:16 linpear kernel: [<c05e0f99>] ? tcp_v4_rcv+0x6b9/0x7a0

Sep 29 16:26:16 linpear kernel: [<c05c2fc7>] ? ip_local_deliver_finish+0x97/0x220

Sep 29 16:26:16 linpear kernel: [<c05c2f30>] ? ip_rcv+0x320/0x320

Sep 29 16:26:16 linpear kernel: [<c05c298b>] ? ip_rcv_finish+0xfb/0x380

Sep 29 16:26:16 linpear kernel: [<c0540dae>] ? __netif_receive_skb+0x2fe/0x370

Sep 29 16:26:16 linpear kernel: [<c0542597>] ? netif_receive_skb+0x67/0x70

Sep 29 16:26:16 linpear kernel: [<c04e35fc>] ? handle_incoming_queue+0x17c/0x250

Sep 29 16:26:16 linpear kernel: [<c04e38ec>] ? xennet_poll+0x21c/0x540

Sep 29 16:26:16 linpear kernel: [<c0542d2a>] ? net_rx_action+0xea/0x190

Sep 29 16:26:16 linpear kernel: [<c0139cfc>] ? __do_softirq+0x7c/0x110

Sep 29 16:26:16 linpear kernel: [<c0139c80>] ? irq_enter+0x60/0x60

Sep 29 16:26:16 linpear kernel: <irq> [<c0139ade>] ? irq_exit+0x6e/0xa0

Sep 29 16:26:16 linpear kernel: [<c047829d>] ? xen_evtchn_do_upcall+0x1d/0x30

Sep 29 16:26:16 linpear kernel: [<c06c0947>] ? xen_do_upcall+0x7/0xc

Sep 29 16:26:16 linpear kernel: [<c01013a7>] ? hypercall_page+0x3a7/0x1000

Sep 29 16:26:16 linpear kernel: [<c0105c7f>] ? xen_safe_halt+0xf/0x20

Sep 29 16:26:16 linpear kernel: [<c010f41e>] ? default_idle+0x2e/0x60

Sep 29 16:26:16 linpear kernel: [<c0107f72>] ? cpu_idle+0x42/0x70

Sep 29 16:26:16 linpear kernel: [<c086977f>] ? start_kernel+0x2ce/0x2d3

Sep 29 16:26:16 linpear kernel: [<c08692ef>] ? kernel_init+0x112/0x112

Sep 29 16:26:16 linpear kernel: [<c086c943>] ? xen_start_kernel+0x4f7/0x4ff

Sep 29 16:26:16 linpear kernel: Mem-Info:

Sep 29 16:26:16 linpear kernel: DMA per-cpu:

Sep 29 16:26:16 linpear kernel: CPU 0: hi: 0, btch: 1 usd: 0

Sep 29 16:26:16 linpear kernel: CPU 1: hi: 0, btch: 1 usd: 0

Sep 29 16:26:16 linpear kernel: CPU 2: hi: 0, btch: 1 usd: 0

Sep 29 16:26:16 linpear kernel: CPU 3: hi: 0, btch: 1 usd: 0

Sep 29 16:26:16 linpear kernel: Normal per-cpu:

Sep 29 16:26:16 linpear kernel: CPU 0: hi: 186, btch: 31 usd: 47

Sep 29 16:26:16 linpear kernel: CPU 1: hi: 186, btch: 31 usd: 102

Sep 29 16:26:16 linpear kernel: CPU 2: hi: 186, btch: 31 usd: 170

Sep 29 16:26:16 linpear kernel: CPU 3: hi: 186, btch: 31 usd: 157

Sep 29 16:26:16 linpear kernel: active_anon:25003 inactive_anon:20896 isolated_anon:0

Sep 29 16:26:16 linpear kernel: active_file:29166 inactive_file:21213 isolated_file:0

Sep 29 16:26:16 linpear kernel: unevictable:0 dirty:40 writeback:0 unstable:0

Sep 29 16:26:16 linpear kernel: free:23517 slab_reclaimable:2127 slab_unreclaimable:1688

Sep 29 16:26:16 linpear kernel: mapped:8184 shmem:2983 pagetables:550 bounce:0

Sep 29 16:26:16 linpear kernel: DMA free:4952kB min:84kB low:104kB high:124kB active_anon:1252kB inactive_anon:892kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15808kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:24kB slab_unreclaimable:80kB kernel_stack:8kB pagetables:100kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

Sep 29 16:26:16 linpear kernel: lowmem_reserve[]: 0 500 500 500

Sep 29 16:26:16 linpear kernel: Normal free:89116kB min:2816kB low:3520kB high:4224kB active_anon:98760kB inactive_anon:82692kB active_file:116664kB inactive_file:84852kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:512064kB mlocked:0kB dirty:160kB writeback:0kB mapped:32736kB shmem:11932kB slab_reclaimable:8484kB slab_unreclaimable:6672kB kernel_stack:984kB pagetables:2100kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

Sep 29 16:26:16 linpear kernel: lowmem_reserve[]: 0 0 0 0

Sep 29 16:26:16 linpear kernel: DMA: 274*4kB 152*8kB 89*16kB 26*32kB 6*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 4952kB

Sep 29 16:26:16 linpear kernel: Normal: 171*4kB 3764*8kB 2923*16kB 353*32kB 4*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 89116kB

Sep 29 16:26:16 linpear kernel: 57321 total pagecache pages

Sep 29 16:26:16 linpear kernel: 3950 pages in swap cache

Sep 29 16:26:16 linpear kernel: Swap cache stats: add 46723, delete 42773, find 336587/339261

Sep 29 16:26:16 linpear kernel: Free swap = 217200kB

Sep 29 16:26:16 linpear kernel: Total swap = 262140kB

Sep 29 16:26:16 linpear kernel: 133104 pages RAM

Sep 29 16:26:16 linpear kernel: 0 pages HighMem

Sep 29 16:26:16 linpear kernel: 5833 pages reserved

Sep 29 16:26:16 linpear kernel: 51022 pages shared

Sep 29 16:26:16 linpear kernel: 78602 pages non-shared</c086c943></c08692ef></c086977f></c0107f72></c010f41e></c0105c7f></c01013a7></c06c0947></c047829d></c0139ade></irq></c0139c80></c0139cfc></c0542d2a></c04e38ec></c04e35fc></c0542597></c0540dae></c05c298b></c05c2f30></c05c2fc7></c05e0f99></c05e080e></c057fb3c></c05d9a1b></c05ddcd4></c05dd965></c05daf25></c05c7568></c05c83ca></c05c815b></c05439d9></c0557a62></c05c8020></c05c8020></c05436dd></c0607ad3></c053ad3d></c066530d></c053a7fe></c01b282d></c01b2726></c01b22b3></c018baa4></c018b258>

My question- does the 3.0 kernel deal with swappiness differently than the 2.6 series? It seems to be using swap even when it doesn't need it. I do have the folowing entry in my sysctl.conf:

vm.panic_on_oom=1

kernel.panic=10

vm.swappiness=25

??~~

9 Replies

This post

At least I know I didn't link to the wrong post

Can you see, from syslog, if the errors were occuring at times of heaving network activity? On my machine it seems to coincide with an rsync kicking off…

I'm also running Debian Squeeze 32bit on a 512mb Linode (latest 3.0 kernel (3.0.4-linode38).

Here's an example error from my kern.log:

It's driving me mad trying to figure out why my linode is going into swap and behaving like it's OOM when I have so much free memory.

Happens on real hardware, too:

rtucker@witte:~$ free -m

total used free shared buffers cached

Mem: 11662 11269 393 0 158 2613

-/+ buffers/cache: 8498 3164

Swap: 12287 379 11908

After dumping out the swap (swapoff -a, swapon -a), I have found that the kernel WILL swap stuff out to make room for caching when a lot of I/O or memory allocation is going on. Here's one of my more I/O-intensive nodes:

rtucker@framboise:~$ uname -a

Linux framboise 3.0.4-linode38 #1 SMP Thu Sep 22 14:59:08 EDT 2011 i686 GNU/Linux

rtucker@framboise:~$ free -m

total used free shared buffers cached

Mem: 497 454 42 0 23 267

-/+ buffers/cache: 163 333

Swap: 255 122 133

rtucker@framboise:~$ vmstat 1

procs -----------memory---------- ---swap-- -----io---- -system-- ----cpu----

r b swpd free buff cache si so bi bo in cs us sy id wa

0 1 125524 43672 24168 273884 0 1 1 1 0 1 2 1 94 4

0 0 125524 44664 24176 273948 0 0 0 40 1489 614 0 0 96 4

0 0 125524 44788 24184 273968 0 0 16 40 3669 1788 0 0 97 3

0 0 125524 44788 24188 273968 0 0 0 1 2510 1186 0 0 99 0

0 0 125524 46144 24196 274072 0 0 148 0 1205 345 0 0 97 3

0 0 125524 46144 24212 274100 0 0 0 52 959 328 0 0 100 0

0 0 125524 46144 24212 274108 0 0 0 0 848 275 0 0 100 0

0 1 125524 46184 24224 274096 0 0 16 0 884 321 0 0 99 1

0 0 125524 45316 24224 274272 0 0 160 0 1069 315 0 0 99 0

0 0 125524 45316 24224 274276 0 0 0 0 920 319 0 0 100 0

0 1 125524 46184 24244 274384 0 0 112 500 1199 444 0 0 93 6

[Disabled swap: swapoff -a]

1 1 121028 42300 24256 275912 4156 0 4264 68 3536 4025 2 1 68 29

1 0 116796 38952 24256 277440 3368 0 3368 0 5368 4429 0 1 79 20

0 1 113376 36720 24256 277572 2176 0 2176 0 3264 2763 0 2 75 23

0 1 109728 33372 24256 277588 3356 0 3356 0 2359 2804 0 1 79 19

0 1 100212 26676 24256 278632 6656 0 6656 128 3095 5267 0 3 78 19

0 1 98068 24692 24256 278596 2088 0 2088 0 1918 1860 0 2 80 18

0 1 90796 22708 24264 279228 2020 0 2020 36 1936 1840 1 1 75 23

0 1 89332 22212 24264 279364 440 0 440 0 1281 624 0 0 78 21

0 1 84716 19648 24264 279560 2676 0 2676 0 2009 2291 0 2 77 21

0 1 82820 17664 24280 280068 1860 0 1860 720 1838 1718 1 1 77 21

procs -----------memory---------- ---swap-- -----io---- -system-- ----cpu----

r b swpd free buff cache si so bi bo in cs us sy id wa

1 1 75676 10720 24280 280696 6896 0 6896 0 3612 5616 0 3 75 22

1 1 72304 25852 18732 266940 3340 0 3368 0 5735 4541 1 2 77 19

0 1 68320 22616 18756 267556 3628 0 3716 84 3993 3971 1 1 77 20

1 0 63076 18772 18756 268584 3796 0 3796 0 2391 3154 0 2 78 21

0 1 54536 78260 8820 212820 6864 0 7199 40 3296 5505 0 3 77 20

0 1 51172 74912 8820 213216 3260 0 3284 0 2137 2733 0 1 75 24

0 1 47516 71440 8820 213636 3504 0 3560 0 2173 2914 0 1 77 22

0 1 41004 64992 8828 214008 6384 0 6384 48 3066 5074 0 2 78 20

0 1 33372 57428 8828 216384 7520 0 7520 0 3259 5893 0 2 77 21

0 1 29028 53212 8828 216604 4240 0 4240 3 2484 3453 0 2 78 20

0 1 26272 50556 8828 216880 2740 0 2740 0 2517 2503 0 1 77 22

0 2 24352 48700 8832 217024 1856 0 1856 336 4382 3089 0 1 77 22

1 0 23292 47584 8860 217236 1056 0 1096 532 2900 1929 0 1 75 25

0 2 21444 45080 8904 217876 1800 0 2076 0 2262 1894 1 1 72 27

0 1 16956 41336 8920 218428 4364 0 4508 60 2947 3807 1 2 76 21

0 1 9816 34392 8920 222188 6988 0 6988 0 3326 5544 0 2 78 20

0 1 2060 28316 8920 223320 6064 0 6064 0 2824 4821 0 2 78 20

0 0 0 25828 8956 224140 1836 0 2656 0 2160 1918 0 1 79 20

0 0 0 25844 8956 224228 0 0 0 0 793 260 0 0 100 0

0 0 0 25844 8964 224224 0 0 0 68 826 272 0 0 100 0

0 0 0 25844 8964 224228 0 0 0 0 1008 441 0 0 100 0

[Re-enabled swap: swapon -a]

[... 20 seconds of boring ...]

procs -----------memory---------- ---swap-- -----io---- -system-- ----cpu----

r b swpd free buff cache si so bi bo in cs us sy id wa

1 0 0 22116 9148 226220 0 0 0 0 2364 1070 0 0 100 0

0 0 0 22172 9148 226220 0 0 0 0 932 281 0 0 100 0

1 0 0 22172 9156 226216 0 0 0 41 904 325 0 0 100 0

0 0 0 22172 9156 226220 0 0 0 0 644 234 0 0 100 0

0 0 0 22172 9156 226220 0 0 0 0 849 299 0 0 100 0

[munin-update starts polling 9 nodes]

1 0 0 21156 9164 226212 0 0 8 44 1051 544 2 0 95 3

0 3 0 21240 9172 226248 0 0 40 0 1108 376 0 0 90 9

0 2 0 20084 9208 226556 0 0 300 640 1225 496 0 0 50 49

0 3 0 13016 9268 227192 0 0 656 0 2081 687 2 1 73 23

0 4 10340 34672 8428 201444 0 10340 636 10344 3770 1670 4 3 68 18

1 2 12504 50420 8312 196256 96 2328 1270 2356 2677 1333 1 1 54 44

0 1 12496 50844 8312 196652 0 0 436 0 1534 469 0 0 76 24

0 1 12496 50720 8312 196872 0 0 204 4 977 338 0 0 74 25

1 0 12456 47420 8344 198308 28 0 1528 48 1640 477 6 0 80 13

0 1 12452 34180 8348 198504 128 0 372 0 1620 467 4 2 61 32

2 2 12432 26948 8396 199396 228 0 1032 44 2210 798 1 1 69 28

0 2 12404 23476 8460 199848 64 0 580 0 2074 646 2 1 57 39

0 7 12316 23120 8472 200120 8 0 292 12 1157 389 1 0 57 41

0 5 12308 22608 8476 200272 28 0 116 168 1617 633 1 0 53 46

1 7 12228 21180 8496 200308 32 0 172 236 4177 2053 3 1 34 59

1 5 12144 20476 8544 200832 32 0 392 164 2963 1210 2 2 51 41

procs -----------memory---------- ---swap-- -----io---- -system-- ----cpu----

r b swpd free buff cache si so bi bo in cs us sy id wa

3 2 12064 20316 8588 201436 64 0 700 76 2232 1049 6 1 64 27

0 3 12064 20060 8612 201512 0 0 180 340 1994 592 2 1 65 31

0 0 12064 19052 8628 202488 0 0 860 84 1474 554 0 0 72 27

0 0 12064 19068 8636 202688 0 0 264 0 2268 812 2 2 84 12

1 0 12064 18448 8648 202836 0 0 180 0 3161 1013 2 3 85 10

[...]

As you can see, despite having plenty of free RAM, it immediately dumped about 12 MB to swap the moment things got busy. It will do that. This particular task occurs every five minutes, and if it can move something that is only accessed every fifteen minutes to swap so that it doesn't have to hit the disk to read something it needs every five minutes, it is a win. (It takes a little while for it to reach a steady state.) There's also some memory allocation going on here, which results in a direct pressure on the memory system, but I've seen it happen with small processes, too.

Yes, there was a sudden shift in memory usage, and yes, there was high I/O wait. However, in the long run, swapping results in decreased I/O, and therefore lower I/O wait.

Really weird, but amazing to watch in real time.

~~

~~

Is this symptomatic of a memory leak? For the moment I'm going to switch pm to static with a low number of max_children to see if I can get memory consumption under control.~~